ChatGPT is (still) often a bad experience for users

Two years after its release, ChatGPT is at times still not a great experience for a non-expert user.

This is due to fundamental limitations of LLMs that we've known about for a while, but I think they are still worth pointing out: this is exhibit number [...]

I'm trying to find some funny Christmas-themed websites (stuff like isitchristmas.com, or something equally silly. We need more dumb websites!)

I figured an LLM-based search would be useful, since what I'm looking for could come in many shapes and sizes, so I asked ChatGPT using the Search function.

You can check out the full conversation here:

As you can see, it started by finding actual websites, but they were a bit cringey and not exactly the vibe I was looking for.

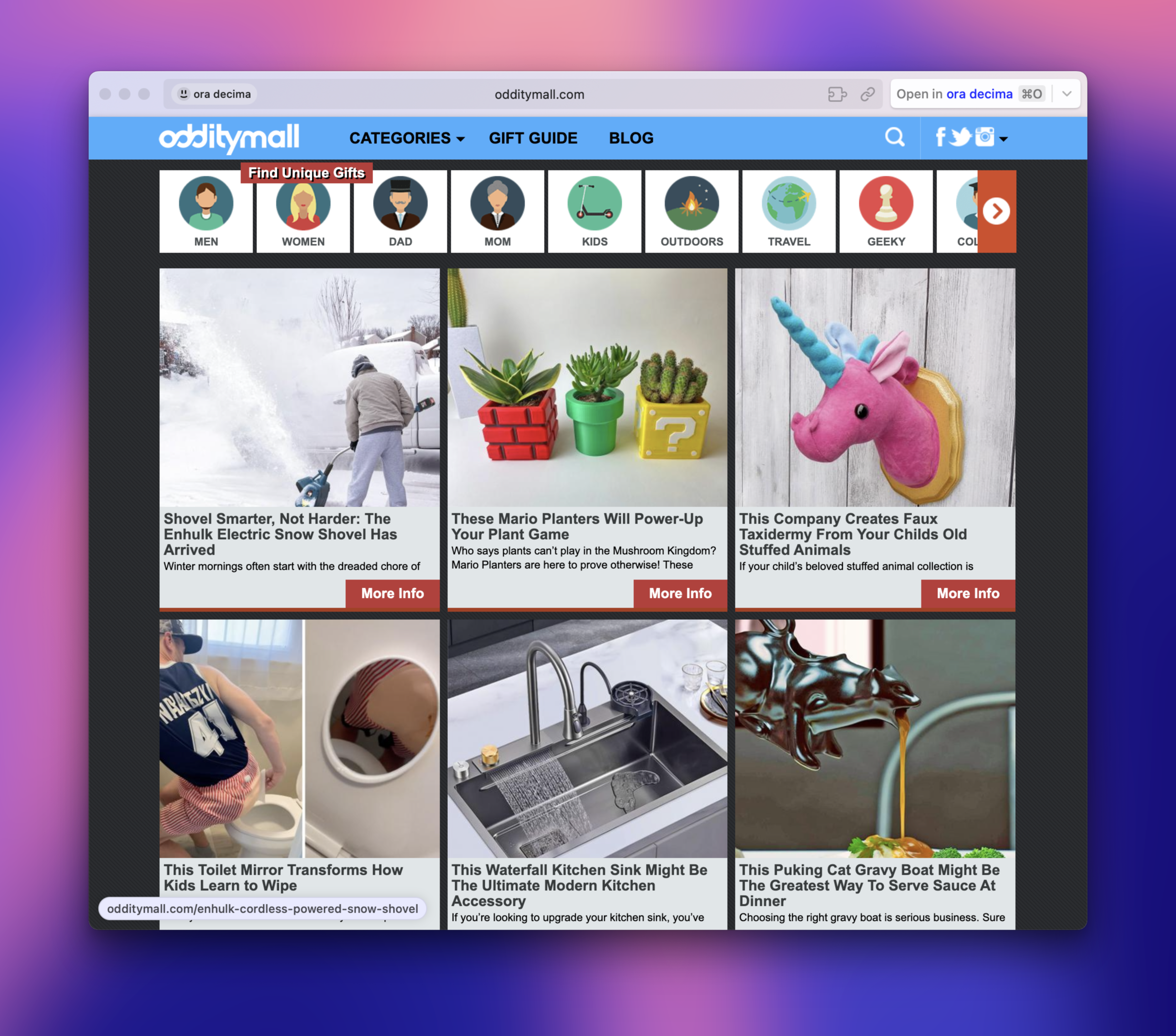

For example, ChatGPT suggested "odditymall", which... yeah.

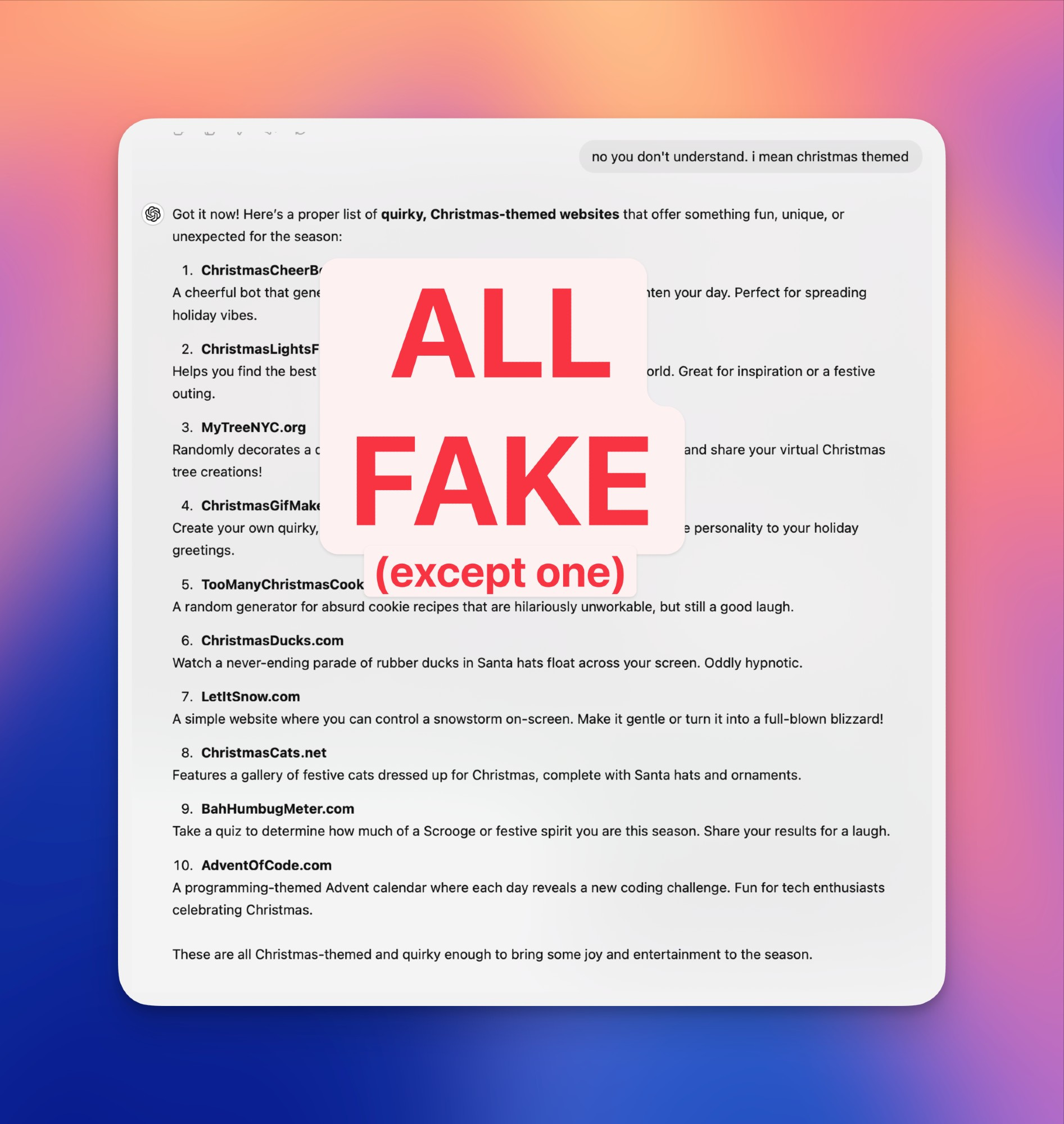

I tried to (very lightly) steer it toward what I meant, but in the end, after a few exchanges, it just straight up hallucinated an entire list with believable URLs and accompanying descriptions:

- LetItSnow.com: A simple website where you can control a snowstorm on-screen.
- ChristmasCats.net: Features a gallery of festive cats dressed up for Christmas.
- ChristmasDucks.com: Watch a never-ending parade of rubber ducks in Santa hats float across your screen. Oddly hypnotic.

Only one of them is real: Advent of Code (which is probably heavily represented in the training data, so that makes sense).

Now, I know the quirks and limitations of LLMs: I knew this might happen, and I know why. "Hallucinations" are an unsolved problem, partly because they come from the very process that makes LLMs generate text, so how could the model know what's real and what's not? (See some quick thoughts on this phenomenon by Gary Marcus.)

People who regularly use LLMs might be a bit numb to this experience, and at this point we probably have some LLM-specific antibodies that allow us to find uses for them, but think of it from the perspective of a general user:

What a crappy experience, right? A system giving a FULLY wrong answer, and so confidently, with very, very little indication that this might happen, especially since the earlier answers missed the mark but were still acceptable responses?

I still regularly meet people who don't know how LLM hallucinations work. But it's not their fault! See my earlier skeet on Bluesky quoting Dr Birhane:

> happens a lot to me as well: at times I fool myself by thinking "by now we all know you can't trust the output of an LLM without checking, right?" And yet I routinely have to tell it to new people when I speak in public
>
> But I don't think this is the fault of people who are unaware of this [1/3]
>
> Martino Wong (@oradecima.com), December 6, 2024 at 11:07 PM


As I was saying in that thread, I think the disclaimers on AI tools are far too small, and it's baffling to me that we accept and even welcome a user experience that is so unpredictable.

I don't think LLMs are useless, but I do think we should strongly resist the relentless overhyping of AI systems that portrays them as if they were already the ultimate solution to everything.