I can't believe Google put out another ad for AI with a mistake

Google made an ad showcasing the usefulness of Gemini, and the generated text has a mistake in it, but somehow a Google employee says it's not a hallucination.

(And it's apparently fake as well?)

How can this happen so often?

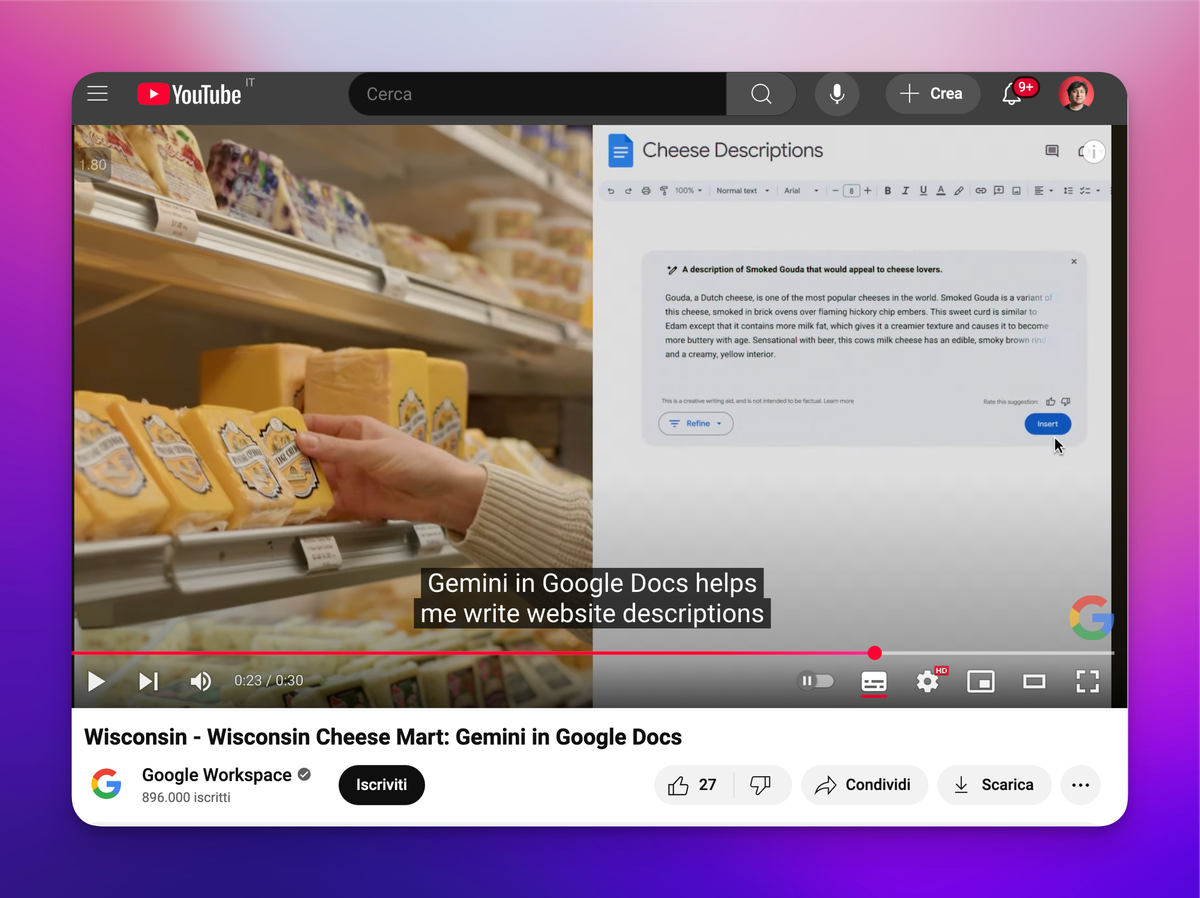

So, Google ran a series of ads for the Super Bowl showcasing its Gemini AI as useful for small businesses: the Wisconsin Cheese Mart is used as an example of a local shop that does a lot of business online and needs a pretty website.

And what better way to have a good website than to fill it with ~~AI slop~~ AI-generated copy using Google Gemini?

(Now, I do honestly understand that there are times when AI-generated text serves a purpose, because you may want some "filler" text. However, I ask myself whether that's just an artificial need, one that stems from the fluff we've long had to endure even though we could do without it. But that's a thought for another time.)

In any case, one thing is certain: you always need to double-check the output of an LLM, period.

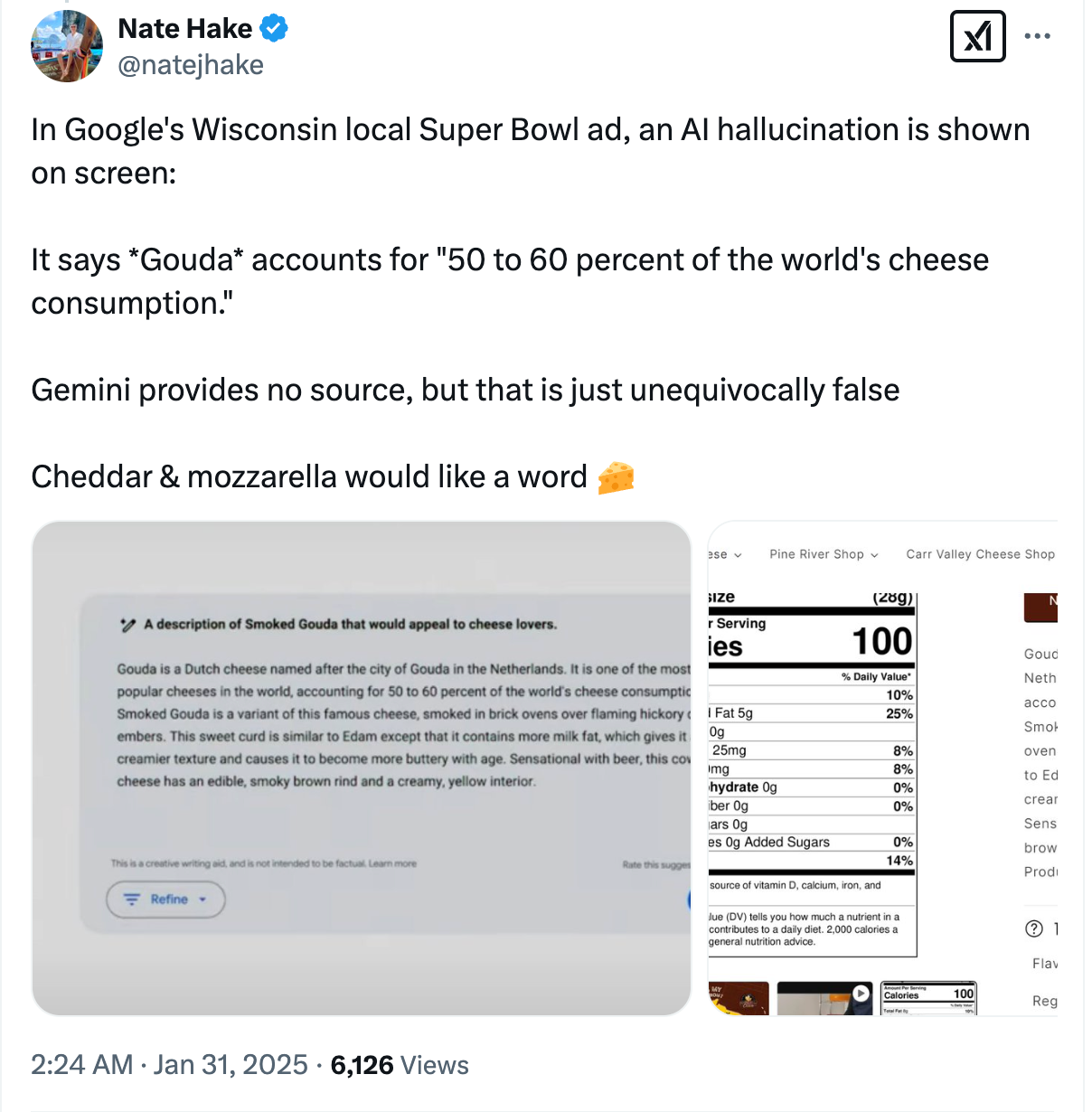

In this case, Nate Hake noticed on Twitter that in the ad Gemini generated text saying that Gouda accounts for "50 to 60 percent of the world’s cheese consumption", which is obviously not true.

We're 2+ years into the widespread availability of LLMs like GPT, and a robust solution to "hallucinations" is nowhere to be seen.

What we interpret as "hallucinations" is a feature of LLMs themselves: it's their very method of generating text.

As the famous "On the Dangers of Stochastic Parrots" paper by Bender et al. correctly foresaw, "coherence is in the eye of the beholder":

when an LLM gets a fact right, it is inevitably partly... a coincidence.

This is not to say that LLMs just produce random output. They're definitely sophisticated systems that are often correct. What I mean is that there is no intentionality and no awareness of what reality is, which means the output needs to be checked. Always.
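To make that point a bit more concrete, here is a toy sketch in Python of what "generating text" means here: picking the next chunk of text at random, weighted by how likely it looks. Everything in it is made up for illustration: the candidate continuations, their probabilities, and the function names are my own assumptions, and no real model (Gemini or otherwise) works off a three-entry table like this.

```python
import random

# Hypothetical probabilities for what follows "Gouda accounts for ...".
# These numbers are invented for illustration; no real model was queried.
NEXT_TOKEN_PROBS = {
    "50 to 60": 0.45,  # assumed to score high because it is repeated a lot online
    "a few": 0.35,
    "about 10": 0.20,
}


def sample_next_token(probs, rng):
    """Pick one continuation at random, weighted by its probability."""
    tokens = list(probs)
    weights = [probs[t] for t in tokens]
    return rng.choices(tokens, weights=weights, k=1)[0]


def complete(rng):
    """Build the full sentence around the sampled continuation."""
    prefix = "Gouda accounts for "
    suffix = " percent of the world's cheese consumption."
    return prefix + sample_next_token(NEXT_TOKEN_PROBS, rng) + suffix


if __name__ == "__main__":
    rng = random.Random()
    for _ in range(3):
        print(complete(rng))
```

Note what is missing: nothing in this code checks which continuation is true. The only thing that matters is the weight, so a claim that sounds plausible and shows up often can win just by being popular.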

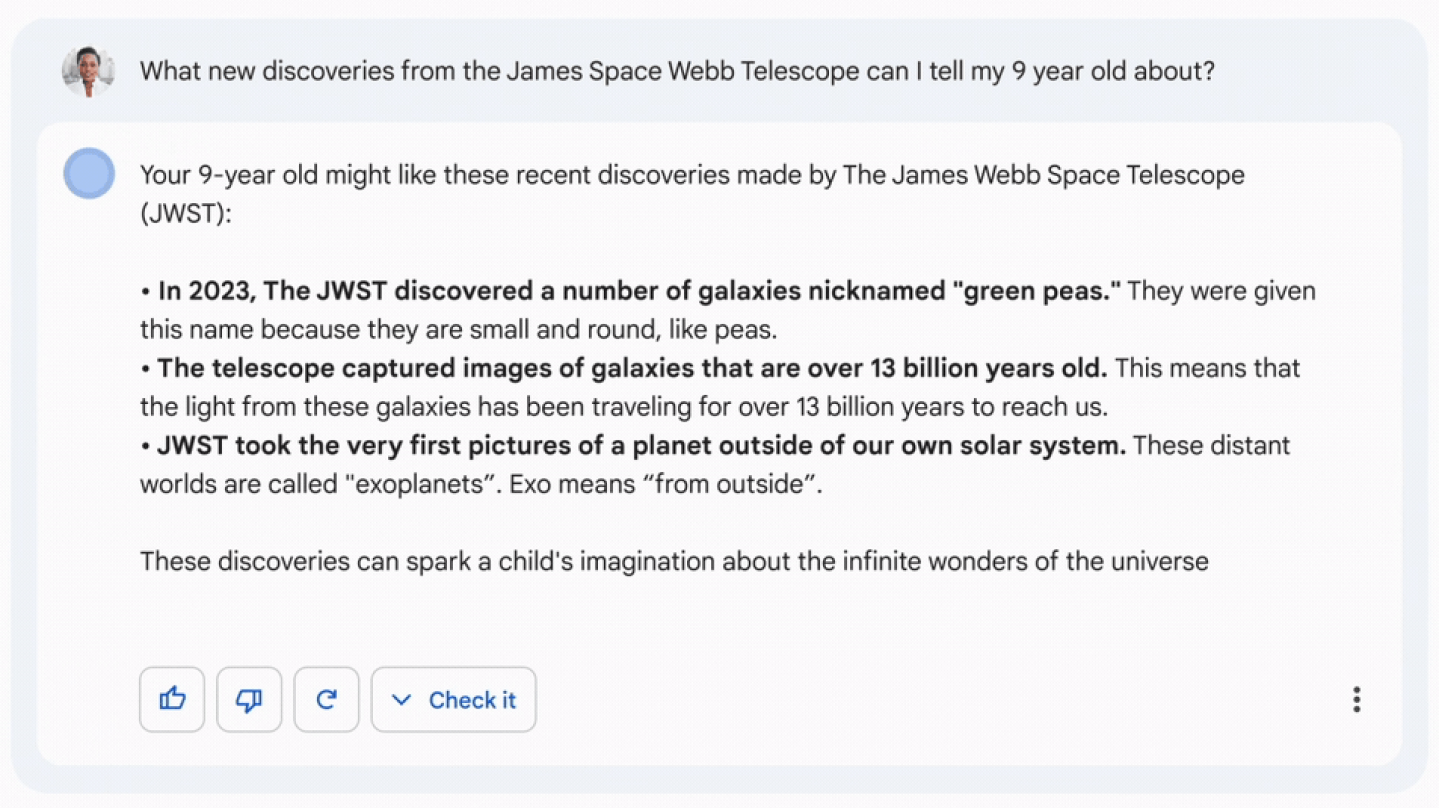

And it's incredibly funny to me that Google hasn't figured this out yet, because it's not the first time this has happened to them. Remember Bard, the AI assistant Google had before Gemini? Its very first demo video had an error: Bard generated text saying that the James Webb Space Telescope took the first pictures of planets outside our solar system, which is, again, false.

Guys, come on? How can it be that "check the LLM output for errors" is not like the first thing on the agenda of an ad that millions of people will see?

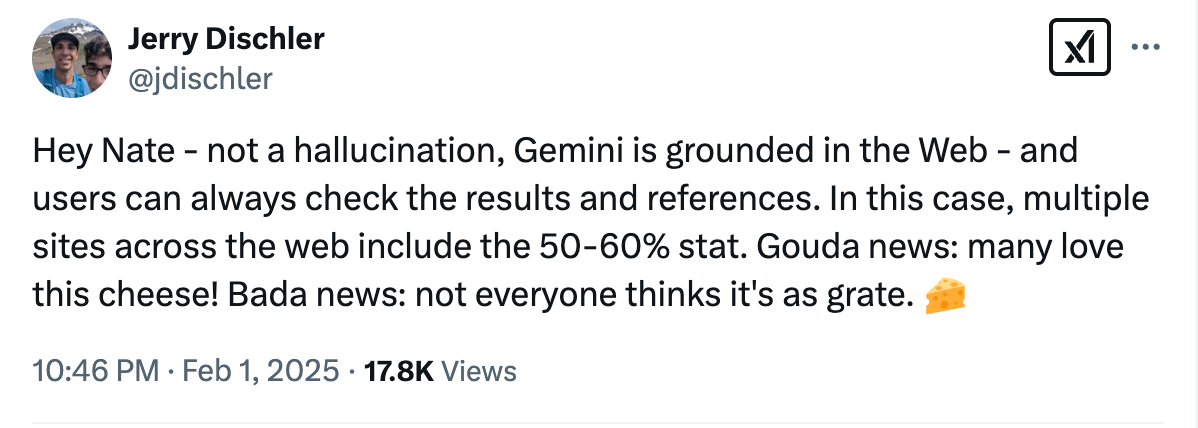

But it doesn't end here! Jerry Dischler, president of Cloud Applications at Google Cloud, answers that multiple sites across the web include this stat, so it's "not a hallucination."

This feels like an incredibly naive rebuttal to me, because it doesn't solve the issue with LLM-based systems. On the contrary, it makes their shortcomings all the more apparent.

The fact that "Gemini is grounded in the Web" is precisely part of the issue: remember when Google AI Overviews suggested eating a rock a day, or putting glue in your sauce to make the cheese stick to the pizza better?

These systems are in fact perfect for perpetuating common misconceptions that get repeated a lot online, and that's exactly because they're grounded in their training data.

They let you feel relieved of the responsibility of actually writing something and being conscious of what it says. But that's an illusion: the responsibility is still there, it's still yours, and the fact that these tools make it easier to skip the check is the issue.

(By the way, Google has edited the commercial on YouTube so the mistake doesn't show anymore, obviously.)

BUT!! It doesn't end there?? Emma Roth reports on The Verge that the text about Gouda has been on the website since 2020, showing the page on the ever so useful Internet Archive, so...they faked the AI? Was the text human all along??

I get that all ads have some fakery in them, and that's fine, but this is somehow an even funnier conclusion.